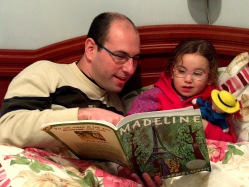

Daddy, where do statistics come from? #MRX

Well, my little one, if you insist. Just one more bedtime story.

A long, long time ago, a bunch of people who really loved weather and biology and space and other areas of natural science noticed a lot of patterns on earth and in space. They created neato datasets about the weather, about the rising and setting of the sun, and about how long people lived. They added new points to their datasets every day because the planets always revolved and the cells always went in the petri dish and the clouds could always be observed. All this happened even when the scientists were tired or hungry or angry. The planets moved and the cells divided and the clouds rained because they didn’t care that they were being watched or measured. And the rulers and scales worked the same whether they were made of wood or plastic or titanium.

Over time, the scientists came up with really neat equations to figure out things like how often certain natural and biological events happened and how often their predictions based on those data were right and wrong. They predicted when the sun would rise depending on the time of year, when the cells would divide depending on the moisture and oxygen, and when the clouds would rain depending on where the lakes and mountains were. This, my little curious one, is where p-values and probability sampling and t-tests and type 1 errors came from.

The scientists realized that using these statistical equations allowed them to gather small datasets and generalize their learnings to much larger datasets. They learned how small a sample could be or how large a sample had to be in order to feel more confident that the universe wasn’t just playing tricks on them. Scientists grew to love those equations and the equations became second nature to them.

It was an age of joy and excitement and perfect scientific test-control conditions. The natural sciences provided the perfect laboratory for the field of statistics. Scientists could replicate any test, any number of times, and adjust or observe any variable in any manner they wished. You see, cells from an animal or plant on one side of the country looked pretty much like cells from the same animal or plant on the other side of the country. It was an age of probability sampling from perfectly controlled, baseline, factory bottled water.

In fact, statistics became so well loved and popular that scientists in all sorts of fields tried using them. Psychologists and sociologists and anthropologists and market researchers started using statistics to evaluate the thoughts and feelings of biological creatures, mostly human beings. Of course, thoughts and feelings don’t naturally lend themselves to being expressed as precise numbers and measurements. And thoughts and feelings are often not understood, or are misunderstood, by the holder. And thoughts and feelings aren’t biologically determined, reliable units. And worst of all, the measurements changed depending on whether the rulers and scales were made of English or Spanish, paper or metal, or human or computer.

Sadly, these new users of statistics grew to love the statistical equations so much that they decided to ignore that the statistics were developed using bottled water. They applied statistics that had been developed using reliable natural occurrences to unreliable intangible occurrences. But they didn’t change any of the basic statistical assumptions. They didn’t redo all the fundamental research to incorporate the unknown, vastly greater degree of randomness and non-randomness that came with measuring unstable, influenceable creatures. They applied their beloved statistics to pond water, lake water, and ocean water, but treated the results as though they came from bottled water.

So you see, my dear, we all know where statistics in the biological sciences come from. The origin of probability sampling and p-values and margins of error is a wonderful story that biologists and chemists and surgeons can tell their little children.

One day, too, perhaps psychologists and market researchers will have a similar story about the origin of psychological statistics methods to tell their little ones.

The end.

Which of these statistical mistakes are you guilty of? #MRX

On the Minitab Blog, Carly Barry listed a number of common and basic statistics errors. Most readers would probably think, “I would never make those errors, I’m smarter than that.” But I suspect that if you took a minute and really thought about it, you’d have to confess you are guilty of at least one. You see, every day we are rushed to finish this report faster, that statistical analysis faster, or those tabulations faster, and in our attempts to get things done, errors slip in.

Number 4 in Carly’s list really spoke to me. One of my pet peeves in marketing research is the overwhelming reliance on data tables. These reports are often hundreds of pages long and include crosstabs of every single variable in the survey crossed with every single demographic variable in the survey. Then, a t-test or chi-square is run for every cross, and each statistically significant difference is carefully noted. Across thousands and thousands of tests, yes, a few hundred are statistically significant. That’s a lot of interesting differences to analyze. (Let’s just ignore the ridiculous error rates of this method.)

But tell me this, when was the last time you saw a report that incorporated effect sizes? When was the last time you saw a report that flagged the statistically significant differences ONLY if that difference was meaningful and large? No worries. I can tell you that answer. Never.

You see, pretty much anything can be statistically significant. By definition, about 5% of tests come up significant at p<0.05 even when there is no real difference at all. Tests run with large samples are significant. Tests of tiny percents are significant. Are any of these meaningful? Oh, who has time to apply their brains and really think about whether a difference would result in a new marketing strategy. The p-value is all too often substituted for our brains.
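To make the "5% by chance" point concrete, here is a minimal simulation sketch. It assumes a pooled two-proportion z-test as a stand-in for the tests in a typical tab report; the function names, the 40% answer rate, and the group sizes are all hypothetical choices for illustration. Both groups are drawn from exactly the same population, so every "significant" result is a false alarm.

```python
import random
from statistics import NormalDist


def two_proportion_p_value(x1, n1, x2, n2):
    """Two-sided p-value for a pooled two-proportion z-test."""
    pooled = (x1 + x2) / (n1 + n2)
    se = (pooled * (1 - pooled) * (1 / n1 + 1 / n2)) ** 0.5
    if se == 0:
        return 1.0  # no variation at all, so nothing to test
    z = (x1 / n1 - x2 / n2) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def count_false_positives(n_tests=2000, n_per_group=200,
                          true_rate=0.4, alpha=0.05, seed=1):
    """Run many tests where both groups share the same true rate."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_tests):
        # Both groups answer "yes" with the same probability.
        x1 = sum(rng.random() < true_rate for _ in range(n_per_group))
        x2 = sum(rng.random() < true_rate for _ in range(n_per_group))
        if two_proportion_p_value(x1, n_per_group, x2, n_per_group) < alpha:
            hits += 1
    return hits


hits = count_false_positives()
print(f"{hits} of 2000 tests were 'significant' with no real difference")
```

With 2,000 crosses and nothing real going on, the count should land somewhere near 100, which is plenty of "findings" to fill a report.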

It’s time to redo those tables. Urgently.

Read an excerpt from Carly’s post here and then continue on to the full post with the link below.

Statistical Mistake 4: Not Distinguishing Between Statistical Significance and Practical Significance

It’s important to remember that using statistics, we can find a statistically significant difference that has no discernible effect in the “real world.” In other words, just because a difference exists doesn’t make the difference important. And you can waste a lot of time and money trying to “correct” a statistically significant difference that doesn’t matter.

via Common Statistical Mistakes You Should Avoid.

Your significant statistical test proves nothing #AAPOR

In quite a few presentations over the last few days I’ve heard people make claims like

– my regression showed that women are more likely to…

– my factor analysis showed there are three groups…

Big problem. Tests prove nothing. Statistical tests give you an idea of the likelihood a bunch of numbers have a distribution due to chance. That’s it. Numbers. Pretty numbers.

The interpretation you make of the numbers, well that’s a completely different story. Everyone will interpret data just a little bit differently. Significant or not.

I poo poo on your significance tests #AAPOR #MRX

What affects survey responses?

– color of the page

– images on the page

– wording choices

– question length

– survey length

– scale choices

All of these options, plus about infinity more, mean that confidence intervals and point estimates from any research are pointless.

And yet, we spew out significance testing at every possible opportunity. You know, when sample sizes are in the thousands, even the tiniest of differences are statistically significant. Even meaningless differences. Even differences caused by the page colour, not the question content.

So enough with the stat testing. If you don’t talk about effect sizes and meaningfulness, then I’m not interested.
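Here is a rough sketch of how sample size alone can flip a verdict. It assumes a pooled two-proportion z-test and uses Cohen's h as one common effect size for proportions; the 51% versus 50% scores and the sample sizes are made-up numbers, and the function names are hypothetical.

```python
from math import asin, sqrt
from statistics import NormalDist


def two_sided_p(p1, p2, n):
    """Two-sided p-value, pooled two-proportion z-test, n people per group."""
    pooled = (p1 + p2) / 2
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    z = (p1 - p2) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def cohens_h(p1, p2):
    """Cohen's h: an effect size for the gap between two proportions."""
    return 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))


# The same one-point gap, tested at three different sample sizes.
for n in (500, 5_000, 50_000):
    p = two_sided_p(0.51, 0.50, n)
    h = cohens_h(0.51, 0.50)
    print(f"n per group = {n:>6}: p = {p:.4f}, effect size h = {h:.3f}")
```

The p-value shrinks as the sample grows, while the effect size does not move, because the difference itself never changed.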

Have we been doing control groups all wrong? #MRX

Every time we test a new subject line, a new survey question, a new image, a new way-cool-and-so-much-better alternative, we always use a control group. That way, when we see the results from the test group, we can immediately see whether it was better, worse, or the same as the way we usually do things.

But let’s take a step back for a minute and remember how “random” sampling and probability works. Statistics tell us that even superior quality research designs are plagued by random chance occurrences. That’s why when we report numbers, we always put a confidence interval around them, say 3 or 4 points. And then on top of that, we have to remember that five per cent of the time, the number we see will be horribly different from reality. Sadly, we can never know whether the number we’ve found is a few points away from reality or 45 points away from reality.

The only way to know for sure, or at least nearly for sure, is to re-run the test 19 more times, to hit the 20 times that allow us to say “19 times out of 20.”

And yet, we only ever use one single control group before declaring our test group a winner, or loser. One control group that could be wrong by 3 points or 43 points.

So here’s my suggestion. Enough with the control group. Don’t use a control group anymore. Instead, use control groups. Yes, plural. Use two control groups. Instead of waiting a week and redoing the test again, which we all know you haven’t got the patience for, do two separate control groups. Launch the control survey twice using two completely separate and identical processes. Not a split-test or hold-back sample. Build the survey. Copy it. Launch full sample to both surveys.

Now you will have a better idea of the kind of variation to expect with your control group. Now you will have a better idea of how truly different your test group is.

No, it’s not 19 repetitions by 19 different researchers in 19 different countries with 19 different surveys but it’s certainly better than assuming your control group is the exact measure of reality.
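As a minimal sketch of the two-control-group idea, the simulation below launches the same control twice and the test once. Everything in it is hypothetical: the function names, the 30% and 34% true rates, and the 500 completes per group are invented for illustration.

```python
import random


def observed_rate(rng, n, true_rate):
    """Share of n simulated respondents who say 'yes'."""
    return sum(rng.random() < true_rate for _ in range(n)) / n


def run_experiment(n=500, control_rate=0.30, test_rate=0.34, seed=7):
    """Two identical control launches plus one test launch."""
    rng = random.Random(seed)
    control_a = observed_rate(rng, n, control_rate)
    control_b = observed_rate(rng, n, control_rate)
    test = observed_rate(rng, n, test_rate)
    return control_a, control_b, test


control_a, control_b, test = run_experiment()
print(f"control A = {control_a:.3f}, control B = {control_b:.3f}")
print(f"gap between identical controls = {abs(control_a - control_b):.3f}")
print(f"test group = {test:.3f}")
```

If the test group beats one control by about the same amount that the two controls differ from each other, that is a hint the "win" may be nothing more than ordinary sampling noise.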

Stop wasting time on significance tests #MRX

Have you ever conducted a research project and NOT done any significance tests?

Have you ever run a series of significance tests and wondered why you bothered to do them?

Let’s think about why we do research projects and why we do significance testing. First of all, research isn’t worth doing unless the methodology is designed very carefully with appropriate sample sizes, great questions, and high standards of data quality. It should be designed with very clear research objectives in mind, with potential outcomes carefully thought out, with potential action steps carefully thought out. Quality research studies are conducted with measures of success clearly outlined before the research is carried out.

If all of these things are in place, then I challenge you to consider why you even bother with significance testing. A research study with clearly thought out objectives should be accompanied by specific hypotheses that lead to specific outcomes. Your well planned out study determined that Product A must generate scores that are at least X% better than Product B before Product A is identified as a success. If it does, then it makes sense to proceed with launching Product A.

So if you already know that you are seeking improvements of size X%, there is zero reason to conduct significance tests. Your measure of success has been predetermined. You already know that, based on your high quality research design, the difference is large enough to warrant moving forward with the launch.

In other words, if you need to run a significance test to determine if a difference is important, then the difference is for sure not important at all. Significance tests aren’t required.

Radical Market Research Idea #6: Don’t calculate p-values #MRX

p-values are the backbone of market research. Every time we complete a study, we run all of our data through a gazillion statistical tests and search for those that are significant. Hey, if you’re lucky, you’ll be working with an extremely large sample size and everything will be statistically significant. More power to you!

But what if you didn’t calculate p-values? What if you simply looked at the numbers and decided if the difference was meaningful? What if you calculated means and standard deviations, and focused more on effect sizes and less on p<0.05? Instead of relying on some statistical test to tell you that you chose a sample size large enough to make the difference significant, what if you used your brain to decide if the difference between the numbers was meaningful enough to warrant taking a decision?

Effect sizes are such an underused, unappreciated measure in market research. Try them. You’ll like them. Radical?
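For anyone who wants to try it, here is a small sketch of one widely used effect size, Cohen's d, which is simply the difference between two means divided by their pooled standard deviation. The function name and the ratings below are made up for illustration.

```python
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means in units of the pooled standard deviation."""
    na, nb = len(group_a), len(group_b)
    pooled_var = ((na - 1) * stdev(group_a) ** 2 +
                  (nb - 1) * stdev(group_b) ** 2) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / pooled_var ** 0.5


# Hypothetical purchase-intent ratings on a 10-point scale.
product_a = [7, 8, 6, 9, 7, 8, 7, 6]
product_b = [6, 7, 6, 8, 6, 7, 7, 5]
print(f"Cohen's d = {cohens_d(product_a, product_b):.2f}")
```

A common rule of thumb treats d around 0.2 as small, 0.5 as medium, and 0.8 as large, but whether a given d is worth acting on is still a judgment call for your brain, not the software.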

Really Simple Statistics: 1-Tail and 2-Tail tests #MRX

Welcome to Really Simple Statistics (RSS). There are lots of places online where you can ponder over the minute details of complicated equations but very few places that make statistics understandable to everyone. I won’t explain exceptions to the rule or special cases here. Let’s just get comfortable with the fundamentals.

What are tails?

No, not these tails.

monosodium demondimum nasirkhan moneysaver67 from morguefile

The tails in statistics refer to the predictions we make about our research results and how we want to hedge our bets.

One tailed tests

One tailed tests are what you use when you have a specific guess. Men are taller than women. Women like chocolate more than men like chocolate. Roses smell nicer than tulips. It’s like putting all your eggs in one basket. Take a guess and hold yourself to it.

xandert from morguefile

Ideally, this is what you should be aiming for. You should have a prediction about what is going to happen before you conduct your research. You should do your homework and not just willy nilly see ‘what comes up significant.’

One tailed tests are advantageous because they give you a better chance of generating differences that turn out to be statistically significant, as long as, of course, your prediction turned out to be right.

Two tailed tests

Two tailed tests are used when you can’t make a guess. Will men or women eat more bread? Is basketball or soccer more fun? Do people spend more on coffee or on hot chocolate? In this case, you’re splitting your eggs between two baskets – maybe men but maybe women, maybe basketball but maybe soccer.

jdurham from morguefile

In reality, most of what we do in market research is based on two tailed tests. We don’t spend the time to develop specific hypotheses ahead of time. We wait to get the datatables and then search through hundreds of pages looking for whatever happens to be significant.
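To see the difference in numbers, here is a minimal sketch that assumes your test produces a z statistic (the function name and the z value of 1.8 are hypothetical). The one tailed p value only counts results in the direction you predicted; the two tailed p value counts both directions.

```python
from statistics import NormalDist


def tail_p_values(z):
    """Return (one tailed, two tailed) p values for a z statistic.

    The one tailed value assumes you predicted a positive z in advance.
    """
    one_tailed = 1 - NormalDist().cdf(z)
    two_tailed = 2 * (1 - NormalDist().cdf(abs(z)))
    return one_tailed, two_tailed


one_tailed, two_tailed = tail_p_values(1.8)
print(f"one tailed p = {one_tailed:.3f}, two tailed p = {two_tailed:.3f}")
```

With z = 1.8, the one tailed result falls under 0.05 and the two tailed result does not. And if the result had come out in the opposite direction from your guess (a negative z here), the one tailed p value would be above 0.5, which is the price of putting all your eggs in one basket.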

And that’s it! Really Simple Statistics!

Really Simple Statistics: p values #MRX

Welcome to Really Simple Statistics (RSS). There are lots of places online where you can ponder over the minute details of complicated equations but very few places that make statistics understandable to everyone. I won’t explain exceptions to the rule or special cases here. Let’s just get comfortable with the fundamentals.

What is a p value?

P value is a short form for probability value and another way of saying significance value. It refers to the chance that you are willing to take in being wrong. (I know, once in your life is too many times to be wrong.)

No matter how careful you are, random chance plays a part in everything. If you try to guess whether you’ll get heads or tails when you flip a coin, your chance of guessing correctly is only 50%. Half the time, you’ll flip tails even if you wanted to flip heads.

In research, we don’t like 50/50 odds. We instead only want to risk that 5% or 1% of our predictions are wrong. And, if you just picked 1% or 5%, you’ve just picked a peck of pickled peppers. Whoops, I mean you’ve just picked a p value.

P values are almost always expressed out of 1. For example, a p value of 0.05 means you are willing to let 5% of your predictions be wrong. A p value of 0.1 means you are willing to let 10% of them be wrong. Don’t let that pesky decimal place fool you. A p value of 0.01 means 1% and a p value of 0.1 means 10%.

When you do a statistical test in software like SPSS or Systat, it will tell you the exact p value associated with your specific set of data. For instance, it might indicate that the p value of your result is 0.035, or “Men are significantly taller than women, p=0.035.” That means that if men and women were really the same height, a difference at least this large would show up only about 3.5% of the time because of random chance alone.
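Going back to the coin, here is a small sketch of where an exact p value can come from. It assumes a fair coin and asks how often random chance alone would produce a result at least as lopsided as the one you saw; the function name and the 60-heads-in-100-flips example are hypothetical.

```python
from math import comb


def p_at_least(heads, flips, p_heads=0.5):
    """Chance of seeing `heads` or more heads in `flips` flips of the coin."""
    return sum(
        comb(flips, k) * p_heads ** k * (1 - p_heads) ** (flips - k)
        for k in range(heads, flips + 1)
    )


# How surprising would 60 or more heads in 100 flips of a fair coin be?
p_value = p_at_least(60, 100)
print(f"p = {p_value:.3f}")
```

If that number is smaller than the 5% or 1% you picked ahead of time, you call the result significant. This is a one tailed calculation, because it only counts "too many heads" and ignores "too many tails."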

Really Simple Statistics!

Data Tables: The scourge of falsely significant results #MRX

Who doesn’t have fond thoughts of 300 page data tabulation reports! Page after page of crosstab after crosstab, significance test after significance test. Oh, it’s a wonderful thing and it’s easy as pie (mmmm…. pie) to run your fingers down the rows and columns to identify all of the differences that are significant. This one is different from B, D, F, and G. That one is different from D, E, and H. Oh, the abundance of surprise findings!